The Evolution of RAG: From Traditional RAG to Agentic RAG

If you’ve been following the LLM world since late 2022, “RAG” (Retrieval-Augmented Generation) is probably one of the first acronyms you learned. It was the go-to technique for reducing hallucinations by grounding a model’s answers in real, up-to-date documents. Fast forward to late 2025: LLMs are smarter, reasoning is stronger, and the classic RAG pipeline is starting to feel… basic. The next evolutionary step is already here, and it’s called Agentic RAG. In this post, we’ll walk through what traditional RAG is, why it’s hitting its limits, and how Agentic RAG takes it further for production-grade LLM applications.

What Is Traditional RAG?

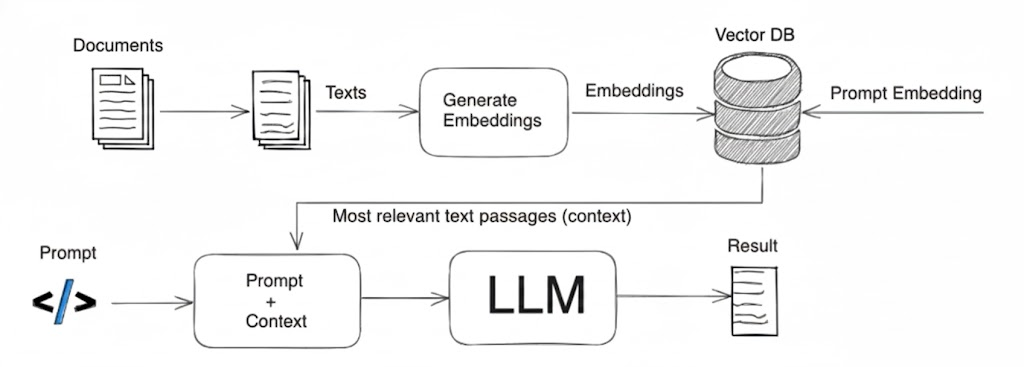

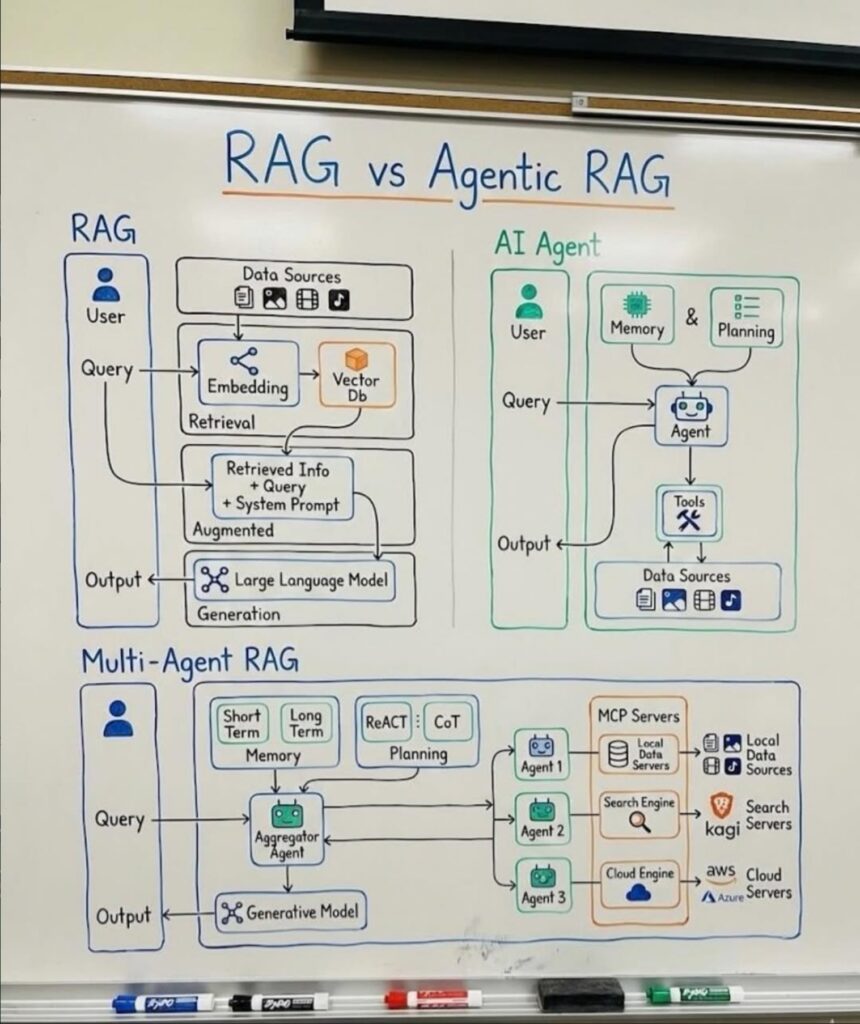

At its core, traditional RAG is a three-step linear pipeline:

- Chunk & Embed → Split your documents into small pieces and turn them into vectors.

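- Retrieve → When a user asks a question, convert the question into a vector and find the most similar chunks in your vector database (typically by cosine similarity, optionally re-ranked with Maximal Marginal Relevance (MMR) to reduce redundancy among the results).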

- Generate → Stuff the retrieved chunks into the LLM prompt and let it write the final answer.
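The three steps above fit in a few dozen lines of Python. This is a minimal sketch, not a production implementation: the bag-of-words `embed` function stands in for a real embedding model, a plain list stands in for the vector database, and the LLM call is left out, so `build_prompt` simply returns the prompt that would be sent. All function names here (`embed`, `chunk`, `build_index`, `retrieve`, `mmr`, `build_prompt`) are hypothetical. `mmr` illustrates the MMR re-ranking mentioned in the retrieval step, with `lam` weighting relevance against redundancy.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words term-count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def chunk(doc: str, chunk_size: int = 50) -> list[str]:
    """Step 1a: split a document into fixed-size word windows."""
    words = doc.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def build_index(docs: list[str]) -> list[tuple[str, Counter]]:
    """Step 1b: embed every chunk; this list plays the role of the vector database."""
    return [(piece, embed(piece)) for doc in docs for piece in chunk(doc)]


def retrieve(query: str, index: list[tuple[str, Counter]], k: int = 2) -> list[str]:
    """Step 2: return the k chunks most similar to the query by cosine similarity."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def mmr(query: str, index: list[tuple[str, Counter]], k: int = 2, lam: float = 0.5) -> list[str]:
    """Optional step 2 variant: Maximal Marginal Relevance re-ranking.

    Each pick maximizes lam * relevance - (1 - lam) * (max similarity to the
    chunks already selected), which pushes near-duplicates down the list.
    """
    q = embed(query)
    candidates, selected = list(index), []
    while candidates and len(selected) < k:
        def score(item):
            redundancy = max((cosine(item[1], s[1]) for s in selected), default=0.0)
            return lam * cosine(q, item[1]) - (1 - lam) * redundancy
        best = max(candidates, key=score)
        selected.append(best)
        candidates.remove(best)
    return [text for text, _ in selected]


def build_prompt(query: str, chunks: list[str]) -> str:
    """Step 3: stuff the retrieved chunks into the prompt (the LLM call is omitted)."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "RAG grounds answers in retrieved documents to reduce hallucination.",
        "Vector databases store embeddings and support similarity search.",
    ]
    question = "How does RAG reduce hallucination?"
    print(build_prompt(question, retrieve(question, build_index(docs), k=1)))
```

Notice that the flow runs exactly once: nothing looks at the retrieved chunks and asks whether they actually answer the question. With an index holding two identical chunks, plain `retrieve` returns both copies, while `mmr` swaps the duplicate for a different chunk.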

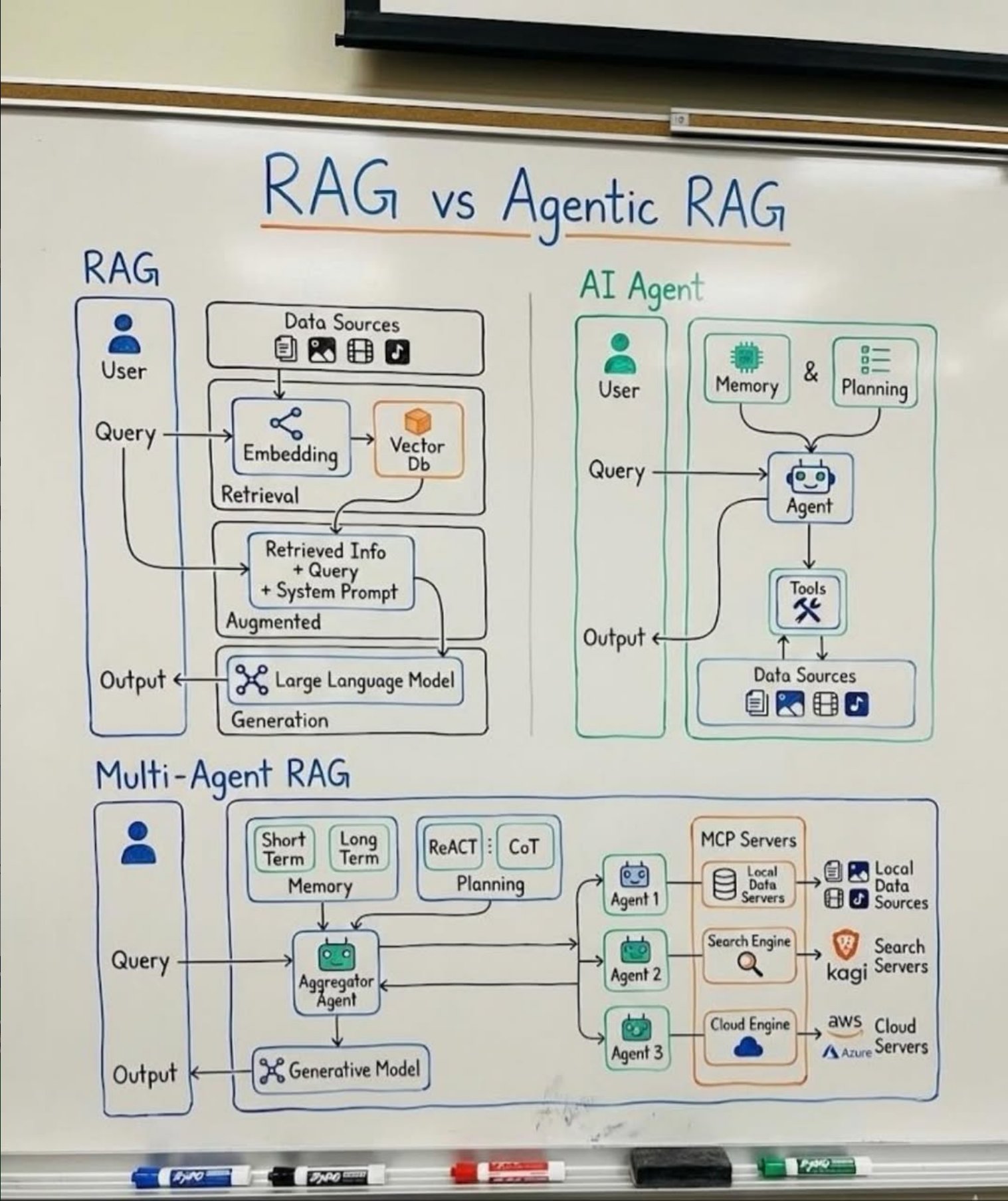

Here’s the classic diagram everyone has seen a thousand times:

Traditional RAG flow: Embedding → Retrieval → Generation (one-shot, no reasoning, no tools)

It works great for simple Q&A over a static knowledge base, but it breaks down fast when queries become multi-hop, ambiguous, or require external verification.

Agentic RAG: When Retrieval Becomes Intelligent

Agentic RAG turns the rigid pipeline into a dynamic, reasoning-driven agent workflow. Instead of “retrieve once and pray,” an AI agent now actively decides:

- Do I have enough information?

- Should I rephrase the query for better retrieval?

- Do I need to call a search API, a calculator, or an internal tool?

- Should I break the question into sub-questions?

- Can I verify the retrieved facts against another source?
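To make that decision loop concrete, here is a minimal, framework-free sketch in Python. Every decision an LLM would normally make is replaced by a deliberately naive rule so the control flow stays visible: `decompose` splits on “and”, `retrieve` does a keyword lookup over a hypothetical two-entry knowledge base `KB`, `rewrite` applies a hypothetical table of rephrasings, and `web_search` is a stub tool that always misses. None of these names come from a real library.

```python
from __future__ import annotations

import re

# Hypothetical toy knowledge base standing in for a vector store.
KB = {
    "capital of france": "Paris is the capital of France.",
    "population of paris": "Paris has about 2.1 million residents.",
}

# Hypothetical rephrasings standing in for LLM-based query rewriting.
REWRITES = {"how many people live in": "population of"}


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str) -> str | None:
    """Return the first fact whose key terms all appear in the query."""
    q = tokens(query)
    for key, fact in KB.items():
        if tokens(key) <= q:
            return fact
    return None


def web_search(query: str) -> str | None:
    """Stub for an external search tool; a real agent would call an API here."""
    return None


def rewrite(query: str) -> str:
    """Rephrase the query so it better matches the index vocabulary."""
    q = query.lower()
    for old, new in REWRITES.items():
        q = q.replace(old, new)
    return q


def decompose(question: str) -> list[str]:
    """Break a compound question into sub-questions (naive split on 'and')."""
    parts = (p.strip(" ?") for p in re.split(r"\band\b", question))
    return [p for p in parts if p]


def answer_sub_question(sub: str, max_steps: int = 3) -> tuple[str | None, int]:
    """Retrieve, fall back to a tool, then rewrite and retry; stop after max_steps."""
    query = sub
    for step in range(1, max_steps + 1):
        fact = retrieve(query) or web_search(query)
        if fact is not None:
            return fact, step
        query = rewrite(query)  # not enough information: rephrase and try again
    return None, max_steps


def agentic_answer(question: str, max_steps: int = 3) -> dict:
    evidence, gaps = {}, []
    for sub in decompose(question):
        fact, _ = answer_sub_question(sub, max_steps)
        if fact is None:
            gaps.append(sub)
        else:
            evidence[sub] = fact
    # Sufficiency check: only claim confidence when every sub-question is covered.
    return {"confident": not gaps, "evidence": evidence, "gaps": gaps}


if __name__ == "__main__":
    print(agentic_answer("What is the capital of France and how many people live in Paris?"))
```

On the compound example, the first sub-question is answered on step one, while the second only succeeds on step two, after the rewrite maps “how many people live in” onto the index’s “population of” vocabulary. In a real system each rule would be an LLM call or a tool invocation; what carries over is the structure: decompose, act, check sufficiency, rephrase, and retry within a fixed step budget.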

The agent plans, executes tools, reflects, and iterates until it’s confident in the answer. Here’s the modern Agentic RAG architecture (2025 version):

Agentic RAG architecture: Agent → Planning → Memory → Tool Use (Search, APIs, DBs) → Reasoning → Final Answer

Key components you’ll see in real-world Agentic RAG systems:

- Long-term & short-term memory

- Planner / Reasoner

- Tool calling (web search, code execution, internal APIs)

- Multi-agent orchestration (optional but increasingly common)

- Self-correction & critique loops
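Two of these components, memory and self-critique, are easy to sketch without any framework. The Python below is a minimal illustration under stated assumptions: the hypothetical `Memory` class keeps a bounded short-term window of conversation turns plus a long-term key/fact store, and `critique_and_correct` acts as a crude critic that keeps only draft sentences whose terms mostly appear in a single evidence passage. The 0.6 overlap `threshold` is an arbitrary choice; production critics usually ask an LLM to judge groundedness rather than counting word overlap.

```python
from __future__ import annotations

import re
from collections import deque


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class Memory:
    """Short-term: the last `window` turns. Long-term: a persistent key -> fact store."""

    def __init__(self, window: int = 3):
        self.short_term = deque(maxlen=window)  # the oldest turn is evicted automatically
        self.long_term: dict[str, str] = {}

    def add_turn(self, role: str, text: str) -> None:
        self.short_term.append((role, text))

    def remember(self, key: str, fact: str) -> None:
        self.long_term[key] = fact

    def recall(self, query: str) -> list[str]:
        """Return long-term facts whose key shares at least one term with the query."""
        q = tokens(query)
        return [fact for key, fact in self.long_term.items() if tokens(key) & q]


def is_supported(claim: str, evidence: list[str], threshold: float = 0.6) -> bool:
    """Crude critic: enough of the claim's terms must appear in a single passage."""
    terms = tokens(claim)
    if not terms:
        return True
    return any(len(terms & tokens(e)) / len(terms) >= threshold for e in evidence)


def critique_and_correct(draft: str, evidence: list[str], threshold: float = 0.6) -> tuple[str, list[str]]:
    """One critique pass: keep grounded sentences, return the unsupported ones separately."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", draft) if s.strip()]
    kept = [s for s in sentences if is_supported(s, evidence, threshold)]
    dropped = [s for s in sentences if s not in kept]
    return " ".join(kept), dropped


if __name__ == "__main__":
    memory = Memory()
    memory.remember("capital of france", "Paris is the capital of France.")
    memory.add_turn("user", "What is the capital of France?")
    evidence = memory.recall("What is the capital of France?")
    draft = "Paris is the capital of France. It was founded by Napoleon."  # pretend LLM draft
    answer, unsupported = critique_and_correct(draft, evidence)
    memory.add_turn("assistant", answer)
    print(answer)       # only the grounded sentence survives
    print(unsupported)  # the claim the critic rejected
```

In a full critique loop, the rejected sentences would go back to the LLM as feedback for another drafting round instead of being silently dropped; the sketch stops after a single pass to stay short.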

Microsoft reports up to 40% better relevance on complex queries with its new agentic retrieval engine.

Real-World Tools & Frameworks Using Agentic RAG (2025)

You don’t have to build everything from scratch. Here are the platforms that have already gone full agentic:

- LlamaIndex – Workflows + Agentic Retrieval (now the default recommendation over basic RAG)

- LangGraph (by LangChain) – State machines and multi-agent supervisor patterns

- CrewAI & AutoGen – Multi-agent collaboration with built-in retrieval agents

- Haystack 2.x – Agent nodes + tool calling pipelines

- Glean – Enterprise Agentic RAG platform (used by Airbnb, Databricks, etc.)

- Perplexity Pro / Sonar – The consumer-facing example everyone actually uses daily

- Microsoft Copilot Studio + Semantic Kernel – Agentic flows in the enterprise

- OpenAI Assistants API + function calling – The original “agentic” interface (still widely used)

When Should You Upgrade to Agentic RAG?

Stick with traditional RAG if:

- Your data rarely changes

- Queries are simple and single-hop

- Latency must stay under 300 ms

Switch to Agentic RAG when:

- Users ask follow-up or multi-step questions

- You need up-to-date information (web search integration)

- Accuracy and verifiability are critical (legal, medical, finance)

- You’re building internal copilots or customer-facing assistants
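If you want this checklist encoded in a design review script or a config-time router, a small helper is enough. This is a hypothetical sketch, not an established rule set: the `UseCase` fields mirror the bullets above, and the policy that any single agentic signal wins (while a sub-300 ms budget only produces a warning) is an assumption, since the checklist itself doesn’t say how to resolve conflicting signals.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    """Hypothetical description of a workload; fields mirror the checklist."""
    multi_step_queries: bool = False    # follow-up or multi-step questions
    needs_fresh_info: bool = False      # e.g. web search integration
    high_stakes: bool = False           # legal, medical, finance
    assistant_product: bool = False     # internal copilot or customer-facing assistant
    latency_budget_ms: int = 1000


def choose_rag_mode(u: UseCase) -> dict:
    """Pick "agentic" if any agentic signal is present, else "traditional"."""
    signals = {
        "multi-step or follow-up questions": u.multi_step_queries,
        "needs up-to-date information": u.needs_fresh_info,
        "accuracy and verifiability are critical": u.high_stakes,
        "copilot or assistant product": u.assistant_product,
    }
    reasons = [name for name, present in signals.items() if present]
    if not reasons:
        return {"mode": "traditional", "reasons": [], "warnings": []}
    warnings = []
    if u.latency_budget_ms < 300:
        # Agent loops add extra LLM and tool round-trips per query.
        warnings.append("a sub-300 ms budget is hard to meet with multi-step agent loops")
    return {"mode": "agentic", "reasons": reasons, "warnings": warnings}


if __name__ == "__main__":
    print(choose_rag_mode(UseCase(high_stakes=True, latency_budget_ms=200)))
```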

Final Thoughts

Traditional RAG was the training wheels that got us from “hallucinating chatbot” to “somewhat reliable assistant.”

Agentic RAG is the moment we take the training wheels off.

In 2026 and beyond, calling something “RAG” without agents, tools, and reasoning loops will feel like calling a modern car “a wagon with an engine”: technically correct, but missing the point.

Start experimenting with agentic patterns today. Your users (and your stakeholders) will notice the difference tomorrow.